Six Things People Hate About DeepSeek

By spearheading the release of these state-of-the-art open-source LLMs, DeepSeek AI has marked a pivotal milestone in language understanding and AI accessibility, fostering innovation and broader applications in the field. Understanding the reasoning behind the system's decisions could be useful for building trust and further improving the approach. If the proof assistant has limitations or biases, this could impair the system's ability to learn effectively. Generalization: the paper does not explore the system's ability to generalize its learned knowledge to new, unseen problems. However, further research is needed to address these potential limitations and to explore the system's broader applicability. DeepSeek-Prover-V1.5 aims to address this by combining two powerful techniques: reinforcement learning and Monte-Carlo Tree Search. This is a Plain English Papers summary of a research paper called DeepSeek-Prover advances theorem proving through reinforcement learning and Monte-Carlo Tree Search with proof assistant feedback. Proof Assistant Integration: the system integrates with a proof assistant, which provides feedback on the validity of the agent's proposed logical steps. It's very simple: after a very long conversation with a system, ask the system to write a message to the next version of itself encoding what it thinks it should know to best serve the human operating it.
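
To make the idea of proof-assistant feedback concrete, here is a minimal toy sketch, not DeepSeek-Prover's code. It checks proofs in a made-up logic whose only inference rule is modus ponens over string formulas. The `check_proof` helper, the rule format, and the binary 0/1 reward are all hypothetical illustrations; a real system would call an actual proof assistant instead.

```python
from typing import Iterable, Optional, Set, Tuple

Rule = Tuple[str, str]  # (antecedent, consequent): "antecedent implies consequent"


def check_proof(
    facts: Set[str],
    rules: Set[Rule],
    goal: str,
    steps: Iterable[Rule],
) -> Tuple[float, Optional[int]]:
    """Hypothetical toy proof checker with modus ponens as its only rule.

    Returns (reward, index_of_first_invalid_step). The reward is 1.0 only
    when every step is valid and the goal has been derived; otherwise 0.0.
    """
    known = set(facts)
    for i, (antecedent, consequent) in enumerate(steps):
        # A step is valid only if it uses an available rule whose
        # antecedent has already been established.
        if (antecedent, consequent) not in rules or antecedent not in known:
            return 0.0, i
        known.add(consequent)
    return (1.0 if goal in known else 0.0), None


# Usage: from fact A and rules A -> B, B -> C, check three candidate proofs of C.
facts, rules = {"A"}, {("A", "B"), ("B", "C")}
print(check_proof(facts, rules, "C", [("A", "B"), ("B", "C")]))  # (1.0, None)
print(check_proof(facts, rules, "C", [("B", "C")]))              # (0.0, 0): B not yet known
print(check_proof(facts, rules, "C", [("A", "B")]))              # (0.0, None): valid but incomplete
```

Returning the index of the first rejected step, rather than only pass/fail, mirrors the point above that the assistant judges each proposed logical step, not just the finished proof.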

We ran several large language models (LLMs) locally to determine which one is best at Rust programming. Here's the best part: GroqCloud is free for most users. They offer an API for using their new LPUs with a variety of open-source LLMs (including Llama 3 8B and 70B) on their GroqCloud platform. Using GroqCloud with Open WebUI is possible thanks to the OpenAI-compatible API that Groq provides. In the second stage, these experts are distilled into one agent using RL with adaptive KL-regularization. Documentation on installing and using vLLM can be found here. OpenAI is the example used most often throughout the Open WebUI docs, but Open WebUI can support any number of OpenAI-compatible APIs. OpenAI can be considered either the classic or the monopoly. Here's another favorite of mine that I now use even more than OpenAI! This feedback is used to update the agent's policy, guiding it toward more successful paths and steering the Monte-Carlo Tree Search process.
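
Because Groq exposes an OpenAI-compatible API, any client that speaks the OpenAI chat-completions format should be able to talk to it. Below is a minimal standard-library sketch of such a request. The base URL `https://api.groq.com/openai/v1` and the model ID `llama3-70b-8192` are assumptions to check against Groq's current documentation, and `build_chat_request` and `send` are hypothetical helper names, not part of any library.

```python
import json
import urllib.request

# Assumed base URL of GroqCloud's OpenAI-compatible API; verify before use.
GROQ_BASE_URL = "https://api.groq.com/openai/v1"


def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) an OpenAI-style POST /chat/completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and return the text of the first reply choice."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Only `send` touches the network. A call might look like `send(build_chat_request(GROQ_BASE_URL, os.environ["GROQ_API_KEY"], "llama3-70b-8192", "Write hello world in Rust"))`, where the `GROQ_API_KEY` environment variable is likewise an assumption. Changing `base_url` points the same request at any other OpenAI-compatible server, which is what lets a frontend like Open WebUI treat these providers interchangeably.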

Reward engineering is the process of designing the incentive system that guides an AI model's learning during training. By simulating many random "play-outs" of the proof process and analyzing the results, the system can identify promising branches of the search tree and focus its efforts on those areas. In the context of theorem proving, the agent is the system searching for the solution, and the feedback comes from a proof assistant: a computer program that can verify the validity of a proof. This innovative approach has the potential to greatly accelerate progress in fields that rely on theorem proving, such as mathematics, computer science, and beyond. The DeepSeek-Prover-V1.5 system represents a significant step forward in the field of automated theorem proving. Addressing these areas could further improve the effectiveness and versatility of DeepSeek-Prover-V1.5, ultimately leading to even greater advances in automated theorem proving. The field of AI is evolving rapidly, with new innovations constantly emerging. Having these large models is good, but only a few fundamental problems can be solved with them. DeepSeek Coder V2 outperformed OpenAI's GPT-4-Turbo-1106 and GPT-4-061, Google's Gemini 1.5 Pro and Anthropic's Claude-3-Opus models at coding.
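
The play-out idea above can be sketched with textbook Monte-Carlo Tree Search using the UCT selection rule on a toy problem. This is not DeepSeek-Prover-V1.5's search procedure; the tactic names, the hidden `SOLUTION`, and the 0/1 `reward` function standing in for proof-assistant feedback are all hypothetical. The binary reward is also the simplest possible piece of reward engineering: credit only for a complete, accepted proof.

```python
import math
import random

TACTICS = ["intro", "apply", "simp", "exact"]   # hypothetical action set
SOLUTION = ("intro", "apply", "exact")           # hypothetical proof the checker accepts
DEPTH = len(SOLUTION)


def reward(state):
    # Stand-in for proof-assistant feedback: 1.0 only if the finished proof checks.
    return 1.0 if state == SOLUTION else 0.0


class Node:
    def __init__(self, state, parent=None):
        self.state = state        # tuple of tactics applied so far
        self.parent = parent
        self.children = {}        # action -> Node
        self.visits = 0
        self.value = 0.0          # sum of play-out rewards through this node

    def is_terminal(self):
        return len(self.state) == DEPTH

    def ucb_child(self, c):
        # UCT: balance average reward (exploit) against rarely visited children (explore).
        return max(
            self.children.values(),
            key=lambda n: n.value / n.visits
            + c * math.sqrt(math.log(self.visits) / n.visits),
        )


def rollout(state, rng):
    # Random "play-out": finish the proof with uniformly random tactics, then score it.
    while len(state) < DEPTH:
        state = state + (rng.choice(TACTICS),)
    return reward(state)


def mcts(iterations=2000, c=1.4, seed=0):
    rng = random.Random(seed)
    root = Node(())
    for _ in range(iterations):
        node = root
        # 1. Selection: descend through fully expanded nodes via UCT.
        while not node.is_terminal() and len(node.children) == len(TACTICS):
            node = node.ucb_child(c)
        # 2. Expansion: add one untried tactic as a new child.
        if not node.is_terminal():
            action = rng.choice([a for a in TACTICS if a not in node.children])
            child = Node(node.state + (action,), parent=node)
            node.children[action] = child
            node = child
        # 3. Simulation: random play-out from the new node.
        r = rollout(node.state, rng)
        # 4. Backpropagation: credit every node on the path.
        while node is not None:
            node.visits += 1
            node.value += r
            node = node.parent
    # Read off the most-visited path as the proposed proof.
    path, node = [], root
    while node.children:
        action, node = max(node.children.items(), key=lambda kv: kv[1].visits)
        path.append(action)
    return tuple(path), root
```

With these settings `mcts()` should return `SOLUTION` as the most-visited path: branches whose play-outs never earn a reward are revisited only as often as the exploration constant `c` forces, so the search concentrates on the branch the checker has rewarded.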

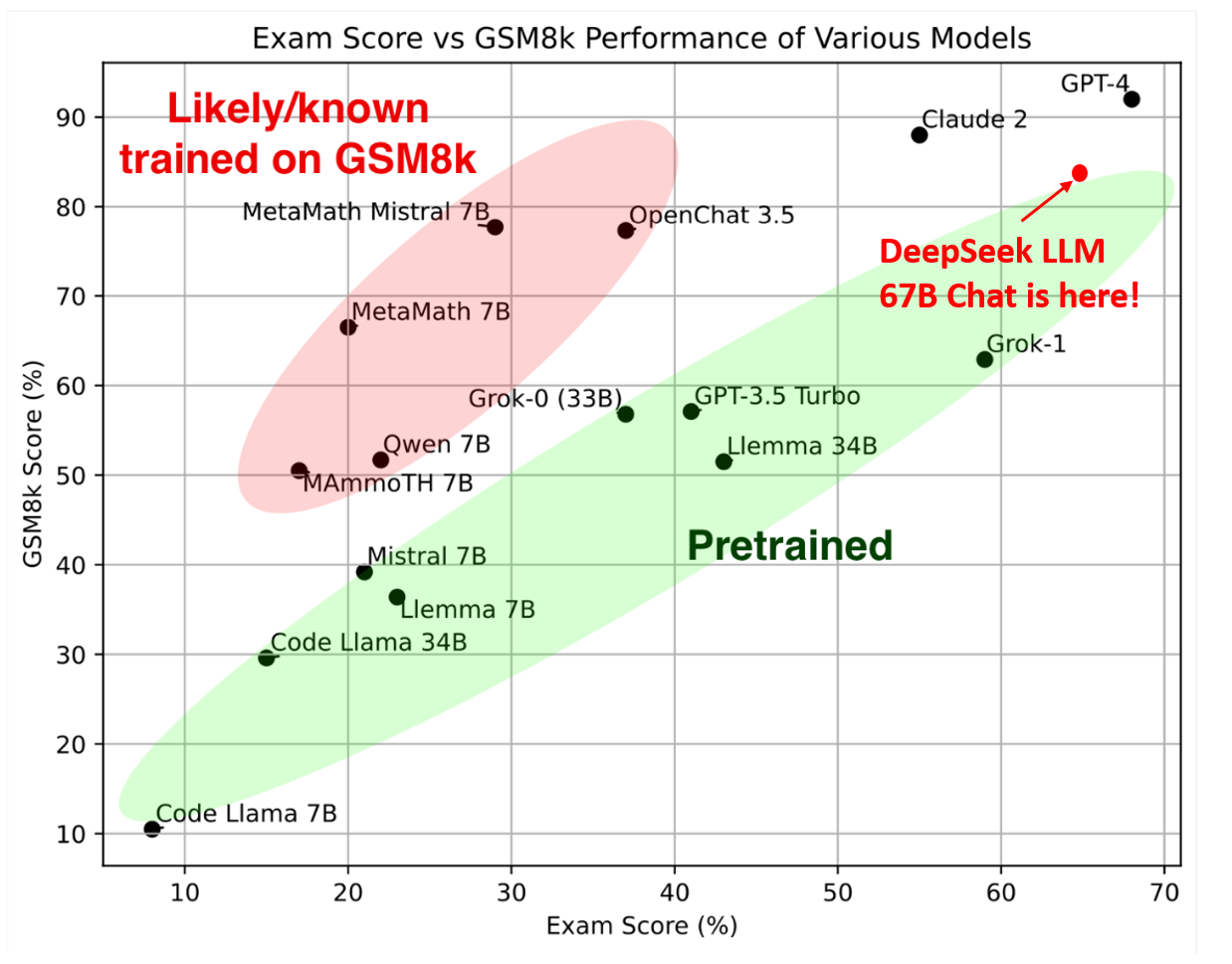

GPT-4o, Claude 3.5 Sonnet, Claude 3 Opus and DeepSeek Coder V2. Take Claude, for example: I don't think many people use Claude, but I do. Even though Llama 3 70B (and even the smaller 8B model) is good enough for 99% of people and tasks, sometimes you just want the best, so I like having the option either to quickly answer my question or to use it alongside other LLMs to quickly get options for an answer. Their claim to fame is their insanely fast inference times: sequential token generation in the hundreds per second for 70B models and thousands for smaller models. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine-tuning/training. Here's Llama 3 70B running in real time on Open WebUI. AI observer Shin Megami Boson, a staunch critic of HyperWrite CEO Matt Shumer (whom he accused of fraud over the irreproducible benchmarks Shumer shared for Reflection 70B), posted a message on X stating he'd run a private benchmark imitating the Graduate-Level Google-Proof Q&A Benchmark (GPQA).